How MindMeld is used to conserve agricultural water

(...and win hackathons in the process)

By Vijay Ramakrishnan

08/28/2019

Water scarcity is a global issue that affects billions of people. Globally, 70% of freshwater is used for agriculture, and demand for it is projected to increase by 15% by 2050. Technology can play a key role in conserving agricultural water, and one such opportunity is helping farmers decide when to irrigate their crops.

At the recent Internet of Things 2019 World Hackathon in Santa Clara, Smart Ag, a team made up of myself and four engineers from other companies, won the grand prize of $15,000 by building a smart irrigation voice assistant to help farmers conserve water. The hackathon was organized by BeMyApp Inc. along with the U.S. Department of Agriculture (USDA).

Traditionally, crops are irrigated on fixed time schedules, for example at 8:00 a.m. and 5:00 p.m. These periodic schedules don't account for changes in the soil's moisture content caused by afternoon rain or a low-pressure system, changes that might mean less water is needed in the next irrigation cycle. While a few studies have estimated how much water smart irrigation technologies could conserve, our consultations with USDA scientists and farmers at the hackathon confirmed our hunch that such a technology would benefit both farmers and water conservation.
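To make the contrast with a fixed schedule concrete, here is a minimal sketch of a moisture-aware irrigation decision. It is not the logic of our prototype: the function name, the 35% target moisture, and the 300 mm root-zone depth are illustrative assumptions, and the linear conversion ignores runoff and drainage.

```python
def irrigation_depth_mm(current_moisture_pct: float,
                        target_moisture_pct: float = 35.0,   # assumed target
                        root_zone_depth_mm: float = 300.0) -> float:  # assumed depth
    """Return roughly how many millimetres of water bring the root zone to target.

    Volumetric moisture is the share of soil volume occupied by water, so a
    deficit of d percentage points over a root zone D mm deep needs about
    d / 100 * D mm of water (ignoring runoff and drainage losses).
    """
    # Rain that has already pushed moisture above target means no water is needed.
    deficit_pct = max(0.0, target_moisture_pct - current_moisture_pct)
    return deficit_pct / 100.0 * root_zone_depth_mm
```

Under these assumptions, a sensor reading of 40 percent after afternoon rain yields 0.0 and the scheduled cycle could be skipped, while a reading of 34.4 percent calls for only about 1.8 mm, something a fixed 8:00 a.m./5:00 p.m. schedule cannot express.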

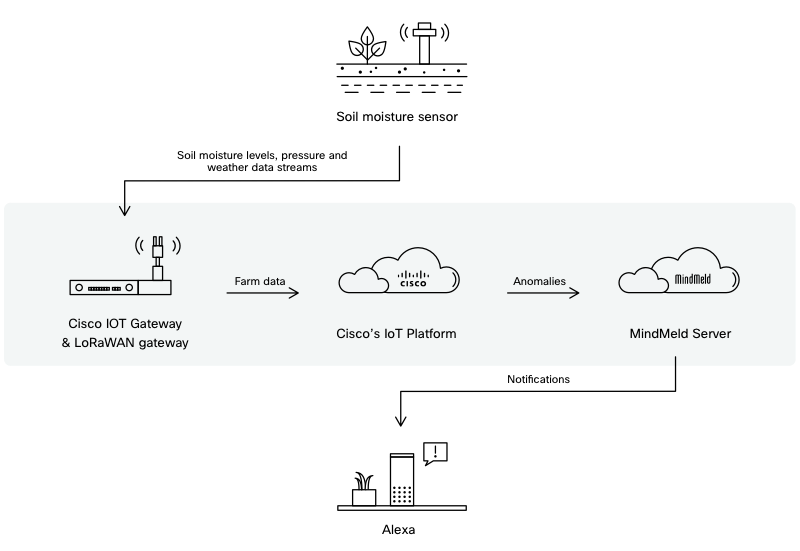

During the 48-hour hackathon, our team designed a smart irrigation voice assistant that detected soil moisture anomalies in the field using soil sensors connected to the cloud and notified the farmer, who could then ask the voice assistant about specific details of an anomalous event or general statistics of their farm. Some sample outputs from the system are “We found 27 soil moisture anomalies in irrigation track 3” and “Your moisture reading at sensor 2 of irrigation track 3 is 34.4 percent.”

Figure 1: Architecture of smart irrigation voice assistant

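This post doesn't specify how anomalies were detected, so the following is only a sketch of one common technique, a rolling z-score, swapped in for illustration. The function names, the 12-reading window, and the threshold of 3 standard deviations are hypothetical; the summary string mirrors the sample output quoted above.

```python
from statistics import mean, stdev


def find_anomalies(readings: list[float], window: int = 12,
                   z_threshold: float = 3.0) -> list[int]:
    """Return indices of readings that deviate strongly from the preceding window."""
    anomalies = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: treat any change at all as anomalous.
            if readings[i] != mu:
                anomalies.append(i)
        elif abs(readings[i] - mu) / sigma > z_threshold:
            anomalies.append(i)
    return anomalies


def summarize(track: int, readings_by_sensor: dict[int, list[float]]) -> str:
    """Count anomalies across all sensors in one irrigation track."""
    count = sum(len(find_anomalies(r)) for r in readings_by_sensor.values())
    return f"We found {count} soil moisture anomalies in irrigation track {track}"
```

A sudden drop from about 30 percent to 12 percent in one sensor's series would be flagged, while the normal jitter of a few tenths of a percent would not.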

One challenge that stood out while designing this prototype was linking the Alexa device to a deployed MindMeld server. While Alexa Skills provides a one-stop shop for developing and deploying applications for Alexa devices, we chose MindMeld because, as an open platform, it could be configured to retrieve information from a proprietary knowledge base provided by an agritech company sponsoring the hackathon. We deployed the MindMeld server, along with this proprietary data, on an AWS instance. The proprietary datasets included soil moisture data and remote sensing data.

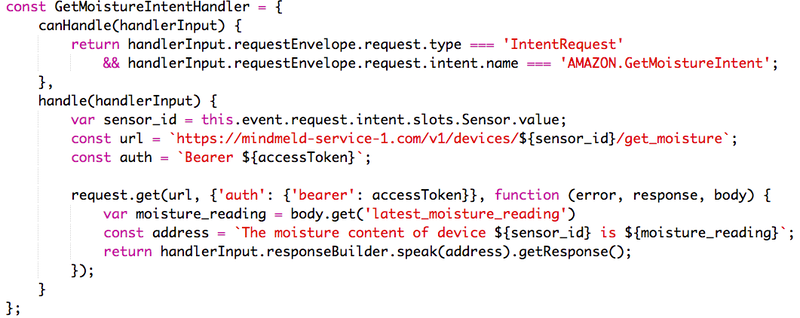

We interfaced the Alexa device with the MindMeld server using simple REST calls. Figure 2 shows an example of an Alexa handler that calls a MindMeld server (or any server, for that matter):

Figure 2: Get_Moisture intent dialogue handler code snippet

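Since the handler in Figure 2 is only available as an image, here is a minimal text sketch of the same idea; it is not the original code. It assumes an AWS Lambda function backs the skill, that the MindMeld server exposes its `/parse` endpoint on the default port 7150 and returns `reply` directives, and that the `Get_Moisture` intent has two slots, hypothetically named `SensorNumber` and `TrackNumber`. `MINDMELD_URL` and all function names are placeholders.

```python
import json
import urllib.request

# Hypothetical address of the deployed MindMeld server (e.g. the AWS instance).
MINDMELD_URL = "http://localhost:7150/parse"


def build_query(alexa_request: dict) -> str:
    """Turn an Alexa Get_Moisture intent into a plain-text query for MindMeld."""
    slots = alexa_request["request"]["intent"]["slots"]
    sensor = slots["SensorNumber"]["value"]  # hypothetical slot name
    track = slots["TrackNumber"]["value"]    # hypothetical slot name
    return f"what is the moisture at sensor {sensor} of irrigation track {track}"


def extract_reply(mindmeld_response: dict) -> str:
    """Join the text of every 'reply' directive in a MindMeld response."""
    return " ".join(
        d["payload"]["text"]
        for d in mindmeld_response.get("directives", [])
        if d.get("name") == "reply"
    )


def to_alexa_response(text: str) -> dict:
    """Wrap plain text in the Alexa Skills Kit response envelope."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    }


def query_mindmeld(text: str, url: str = MINDMELD_URL) -> dict:
    """POST the query as JSON to MindMeld and decode the JSON reply."""
    body = json.dumps({"text": text}).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.loads(resp.read().decode("utf-8"))


def lambda_handler(event: dict, context=None) -> dict:
    """Lambda entry point: Alexa intent in, MindMeld answer out as Alexa speech."""
    return to_alexa_response(extract_reply(query_mindmeld(build_query(event))))
```

Keeping the query building, reply extraction, and response wrapping in separate pure functions makes the glue code testable without a running MindMeld server; only `query_mindmeld` touches the network.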

Our prototype didn't have any complex multi-turn dialogues, but we foresee some challenges in keeping Alexa Skills' dialogue handler and MindMeld's dialogue handler in sync for those cases. One way to solve this is to implement a generic dialogue handler in Alexa Skills that acts as a proxy, routing every request to the MindMeld server so that the two dialogue handlers never need to be synced.
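A proxy of this kind might look like the sketch below. We did not build it, so several assumptions apply: a single catch-all Alexa intent whose slot (hypothetically named `Query`, for example of type `AMAZON.SearchQuery`) captures the whole utterance, and a MindMeld server that returns its dialogue state in `frame` and `history` fields for the client to send back on the next turn. That round-trip is our understanding of MindMeld's stateless server and should be verified against the deployed version. Alexa session attributes carry the state between turns.

```python
import json
import urllib.request

MINDMELD_URL = "http://localhost:7150/parse"  # hypothetical deployment address
STATE_KEYS = ("frame", "history")             # assumed MindMeld state fields to round-trip


def build_proxy_payload(event: dict) -> dict:
    """Forward the raw utterance plus any MindMeld state saved in the Alexa session."""
    utterance = event["request"]["intent"]["slots"]["Query"]["value"]  # hypothetical catch-all slot
    saved = event.get("session", {}).get("attributes") or {}
    payload = {"text": utterance}
    payload.update({k: saved[k] for k in STATE_KEYS if k in saved})
    return payload


def to_alexa(mm: dict) -> dict:
    """Speak MindMeld's replies and stash its dialogue state for the next turn."""
    text = " ".join(d["payload"]["text"] for d in mm.get("directives", [])
                    if d.get("name") == "reply")
    return {
        "version": "1.0",
        "sessionAttributes": {k: mm[k] for k in STATE_KEYS if k in mm},
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": False,  # keep the session open for follow-up turns
        },
    }


def proxy_handler(event: dict, context=None) -> dict:
    """Route every Alexa request to MindMeld; Alexa itself holds no dialogue logic."""
    body = json.dumps(build_proxy_payload(event)).encode("utf-8")
    req = urllib.request.Request(MINDMELD_URL, data=body,
                                 headers={"Content-Type": "application/json"}, method="POST")
    with urllib.request.urlopen(req, timeout=5) as resp:
        return to_alexa(json.loads(resp.read().decode("utf-8")))
```

Because all dialogue state lives in MindMeld's responses and Alexa only stores and replays it, there is a single dialogue handler to maintain.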

Using MindMeld for the hackathon was a great way to appreciate the platform's extensibility: we built a prototype in 48 hours and still had a clear path to productionizing the proof of concept into an enterprise application that can handle high traffic and sophisticated queries.

To learn more about using MindMeld in your own projects, check out our example applications and documentation.

Vijay Ramakrishnan is a Senior Machine Learning Engineer on the Webex Intelligence team at Cisco